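Problem:

A Linux server had a faulty motherboard. After the motherboard was replaced, the server's management IP became unreachable, while its service IP and storage IP kept working.

Root cause analysis:

1. Log in over SSH through the server's service IP and investigate.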

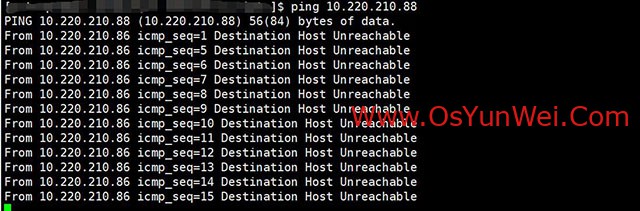

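1.1 Ping other management IP addresses in the same subnet: they are indeed unreachable.

1.2 Check the IP address information with the ip addr command: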

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 70:fd:45:7d:70:96 brd ff:ff:ff:ff:ff:ff
    3: ens1f0: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond2 state UP group default qlen 1000
        link/ether cc:05:77:c8:15:23 brd ff:ff:ff:ff:ff:ff
    4: eno2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 70:fd:45:7d:70:97 brd ff:ff:ff:ff:ff:ff
    5: ens1f1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1450 qdisc mq master bond1 state UP group default qlen 1000
        link/ether cc:05:77:c8:15:22 brd ff:ff:ff:ff:ff:ff
    6: eno3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 70:fd:45:7d:70:98 brd ff:ff:ff:ff:ff:ff
    7: eno4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 70:fd:45:7d:70:99 brd ff:ff:ff:ff:ff:ff
    8: bond2: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether cc:05:77:c8:15:23 brd ff:ff:ff:ff:ff:ff
        inet 10.220.245.86/27 brd 10.220.245.95 scope global noprefixroute bond2
           valid_lft forever preferred_lft forever
        inet6 fe80::ce05:77ff:fec8:1523/64 scope link
           valid_lft forever preferred_lft forever
    9: bond1: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
        link/ether cc:05:77:c8:15:22 brd ff:ff:ff:ff:ff:ff
        inet 10.230.173.60/28 brd 10.230.173.63 scope global dynamic noprefixroute bond1
           valid_lft 857042sec preferred_lft 857042sec
        inet6 2409:8004:5cc0:bb01:0:ae6:ad30:5/128 scope global dynamic noprefixroute
           valid_lft 97602sec preferred_lft 97602sec
        inet6 fe80::ce05:77ff:fec8:1522/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    10: bond0: <NO-CARRIER,BROADCAST,MULTICAST,MASTER,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
        link/ether cc:05:77:c8:15:24 brd ff:ff:ff:ff:ff:ff
        inet 10.220.210.86/26 brd 10.220.210.127 scope global noprefixroute bond0
           valid_lft forever preferred_lft forever

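The ip addr output shows the current state:

bond1: UP, normal

bond2: UP, normal

ens1f1: SLAVE, enslaved to bond1

ens1f0: SLAVE, enslaved to bond2

bond0: DOWN (NO-CARRIER), abnormal

eno3: UP, but not enslaved to any bond

eno4: UP, but not enslaved to any bond

eno1: UP, but not enslaved to any bond

eno2: UP, but not enslaved to any bond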

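From this information, the server has 6 physical NICs in total (eno1, eno2, eno3, eno4, ens1f0 and ens1f1) and uses NIC bonding, in which several physical NICs are aggregated into one logical interface; bond0, bond1 and bond2 are these bonded interfaces.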
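The "enslaved or not" check above can also be scripted. Below is a minimal sketch, assuming the one-line format printed by `ip -o link show`; the function name `list_bond_membership` is hypothetical. It prints every interface followed by its bond master, or `-` when it has none:

```shell
# list_bond_membership (hypothetical helper): read `ip -o link show` output
# on stdin and print "<ifname> <master>", or "<ifname> -" if it has no master.
list_bond_membership() {
    awk '{
        name = $2
        sub(/:$/, "", name)          # "eno1:" -> "eno1"
        sub(/@.*/, "", name)         # drop "@parent" suffixes (VLANs, veths)
        master = "-"
        for (i = 3; i < NF; i++)     # look for the "master <bond>" pair
            if ($i == "master") { master = $(i + 1); break }
        print name, master
    }'
}
```

Usage: `ip -o link show | list_bond_membership`. On this server, physical NICs that print `-` (eno1 to eno4) are the ones that failed to join their bonds.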

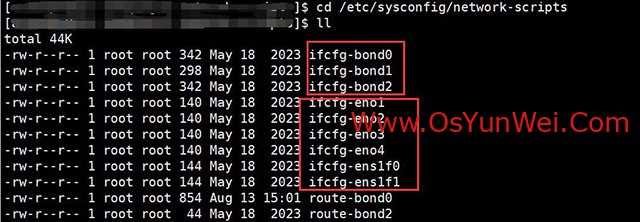

1.3 Next, inspect the NIC configuration files:

    cd /etc/sysconfig/network-scripts

    cat ifcfg-bond0
    DEVICE=bond0
    NAME=bond0
    TYPE=Bond
    BONDING_MASTER=yes
    IPADDR=10.220.210.86
    GATEWAY=10.220.210.65
    NETMASK=255.255.255.192
    ONBOOT=yes
    BOOTPROTO=static #Maybe use dhcp
    IPV4_ROUTE_METRIC=1000
    NM_CONTROLLED=yes
    PEERDNS=no
    MACADDR=cc:05:77:c8:15:24
    IPV6INIT=no
    IPV6ADDR=
    IPV6_DEFAULTGW=
    DHCPV6C=no
    BONDING_OPTS='mode=4 miimon=100 use_carrier=1'

    cat ifcfg-bond1
    DEVICE=bond1
    NAME=bond1
    TYPE=Bond
    BONDING_MASTER=yes
    IPADDR=
    GATEWAY=
    NETMASK=
    ONBOOT=yes
    BOOTPROTO=dhcp #Maybe use dhcp
    IPV4_ROUTE_METRIC=0
    NM_CONTROLLED=yes
    PEERDNS=no
    MACADDR=cc:05:77:c8:15:22
    IPV6INIT=yes
    IPV6ADDR=
    IPV6_DEFAULTGW=
    DHCPV6C=yes
    BONDING_OPTS='mode=4 miimon=100 use_carrier=1'

    cat ifcfg-bond2
    DEVICE=bond2
    NAME=bond2
    TYPE=Bond
    BONDING_MASTER=yes
    IPADDR=10.220.245.86
    GATEWAY=10.220.245.65
    NETMASK=255.255.255.224
    ONBOOT=yes
    BOOTPROTO=static #Maybe use dhcp
    IPV4_ROUTE_METRIC=2000
    NM_CONTROLLED=yes
    PEERDNS=no
    MACADDR=cc:05:77:c8:15:23
    IPV6INIT=no
    IPV6ADDR=
    IPV6_DEFAULTGW=
    DHCPV6C=no
    BONDING_OPTS='mode=4 miimon=100 use_carrier=1'

    cat ifcfg-eno1
    DEVICE=eno1
    NAME=bond1-slave-eno1
    HWADDR=cc:05:77:c8:15:22
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    MASTER=bond1
    SLAVE=yes
    NM_CONTROLLED=yes

    cat ifcfg-eno2
    DEVICE=eno2
    NAME=bond2-slave-eno2
    HWADDR=cc:05:77:c8:15:23
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    MASTER=bond2
    SLAVE=yes
    NM_CONTROLLED=yes

    cat ifcfg-eno3
    DEVICE=eno3
    NAME=bond0-slave-eno3
    HWADDR=cc:05:77:c8:15:24
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    MASTER=bond0
    SLAVE=yes
    NM_CONTROLLED=yes

    cat ifcfg-eno4
    DEVICE=eno4
    NAME=bond0-slave-eno4
    HWADDR=cc:05:77:c8:15:25
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    MASTER=bond0
    SLAVE=yes
    NM_CONTROLLED=yes

    cat ifcfg-ens1f0
    DEVICE=ens1f0
    NAME=bond2-slave-ens1f0
    HWADDR=cc:05:77:ee:1e:51
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    MASTER=bond2
    SLAVE=yes
    NM_CONTROLLED=yes

    cat ifcfg-ens1f1
    DEVICE=ens1f1
    NAME=bond1-slave-ens1f1
    HWADDR=cc:05:77:ee:1e:52
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    MASTER=bond1
    SLAVE=yes
    NM_CONTROLLED=yes

From these files, the bonding layout is:

Management bond0: physical NICs eno3 + eno4

Service bond1: physical NICs eno1 + ens1f1

Storage bond2: physical NICs eno2 + ens1f0
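The same bond-to-slave layout can be pulled out of the ifcfg files directly instead of reading each file. A minimal sketch, assuming the files live in /etc/sysconfig/network-scripts and use plain KEY=value lines; `bond_map` is a hypothetical helper name:

```shell
# bond_map (hypothetical helper): print "<bond> <slave>" for every ifcfg-*
# file in DIR that sets MASTER=, sorted so each bond's slaves are grouped.
# Assumes plain KEY=value lines (optionally quoted), as in the files above.
bond_map() {
    local dir f dev master
    dir=${1:-/etc/sysconfig/network-scripts}
    for f in "$dir"/ifcfg-*; do
        [ -f "$f" ] || continue
        master=$(sed -n 's/^MASTER=//p' "$f" | tr -d "\"'")
        [ -n "$master" ] || continue            # not a bond slave
        dev=$(sed -n 's/^DEVICE=//p' "$f" | tr -d "\"'")
        printf '%s %s\n' "$master" "$dev"
    done | LC_ALL=C sort
}
```

Run with no argument it reads the default directory; on this server it should list bond0 with eno3 and eno4, bond1 with eno1 and ens1f1, and bond2 with eno2 and ens1f0.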

1.4 Check the actual (permanent, burned-in) MAC addresses of the 6 physical NICs

    # Show each NIC's permanent hardware MAC address
    ethtool -P eno1
    Permanent address: 70:fd:45:7d:70:96

    ethtool -P eno2
    Permanent address: 70:fd:45:7d:70:97

    ethtool -P eno3
    Permanent address: 70:fd:45:7d:70:98

    ethtool -P eno4
    Permanent address: 70:fd:45:7d:70:99

    ethtool -P ens1f0
    Permanent address: cc:05:77:ee:1e:51

    ethtool -P ens1f1
    Permanent address: cc:05:77:ee:1e:52

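From this output we can conclude:

The MAC addresses of the physical NICs eno1, eno2, eno3 and eno4 do not match the HWADDR values in their configuration files.

The MAC addresses of the physical NICs ens1f0 and ens1f1 do match their configuration files.

Since the problem appeared right after the motherboard was replaced, eno1, eno2, eno3 and eno4 must be the server's onboard NICs.

Replacing the motherboard also replaced the onboard NICs, so their MAC addresses changed, while the configuration files still contain the old ones. Because HWADDR ties each slave profile to the NIC with that MAC address, the old profiles no longer match the new onboard NICs, which is why eno1 to eno4 came up without being enslaved.

ens1f0 and ens1f1 sit on a separate card, so replacing the motherboard did not change their MAC addresses.

Management bond0 (eno3 + eno4): both slaves have new MAC addresses, so bond0 has no working slave and its network is down.

Service bond1 (eno1 + ens1f1): ens1f1 kept its MAC address, so bond1 still works, but it has lost its redundancy.

Storage bond2 (eno2 + ens1f0): ens1f0 kept its MAC address, so bond2 still works, but it has lost its redundancy.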
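The HWADDR-versus-hardware comparison can be automated rather than done by eye. A sketch, assuming `ethtool` is installed and each slave file sets both DEVICE and HWADDR; `check_hwaddr` is a hypothetical name:

```shell
# check_hwaddr (hypothetical helper): for every ifcfg-* file in DIR that sets
# HWADDR, compare it with the permanent MAC from `ethtool -P <device>`.
# Prints "<device> OK" or "<device> MISMATCH cfg=<cfg> perm=<perm>".
check_hwaddr() {
    local dir f dev cfg perm
    dir=${1:-/etc/sysconfig/network-scripts}
    for f in "$dir"/ifcfg-*; do
        [ -f "$f" ] || continue
        cfg=$(sed -n 's/^HWADDR=//p' "$f" | tr -d "\"'" | tr 'A-F' 'a-f')
        [ -n "$cfg" ] || continue               # file does not pin a MAC
        dev=$(sed -n 's/^DEVICE=//p' "$f" | tr -d "\"'")
        # `ethtool -P` prints "Permanent address: xx:xx:xx:xx:xx:xx"
        perm=$(ethtool -P "$dev" 2>/dev/null | awk '{print $3}' | tr 'A-F' 'a-f')
        if [ "$cfg" = "$perm" ]; then
            echo "$dev OK"
        else
            echo "$dev MISMATCH cfg=$cfg perm=${perm:-unknown}"
        fi
    done
}
```

On this server it would be expected to report eno1 to eno4 as MISMATCH and ens1f0 and ens1f1 as OK.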

Solution:

1. In the configuration files of eno1, eno2, eno3 and eno4, change HWADDR to each NIC's actual (permanent) MAC address.

    cd /etc/sysconfig/network-scripts
    sed -i 's/^HWADDR=.*/HWADDR=70:fd:45:7d:70:96/' ifcfg-eno1
    sed -i 's/^HWADDR=.*/HWADDR=70:fd:45:7d:70:97/' ifcfg-eno2
    sed -i 's/^HWADDR=.*/HWADDR=70:fd:45:7d:70:98/' ifcfg-eno3
    sed -i 's/^HWADDR=.*/HWADDR=70:fd:45:7d:70:99/' ifcfg-eno4
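Instead of typing each MAC address into sed by hand, the new value can be read straight from `ethtool -P`, which avoids copy errors. A minimal sketch under the same assumptions as the commands above; `fix_hwaddr` is a hypothetical name, and it keeps the original file as `ifcfg-<dev>.bak`:

```shell
# fix_hwaddr (hypothetical helper): set HWADDR in DIR/ifcfg-DEV to the
# permanent MAC that `ethtool -P DEV` reports; the original is kept as .bak.
fix_hwaddr() {
    local dev dir perm
    dev=$1
    dir=${2:-/etc/sysconfig/network-scripts}
    perm=$(ethtool -P "$dev" | awk '{print $3}')
    case "$perm" in
        ??:??:??:??:??:??) ;;                   # looks like a MAC address
        *) echo "fix_hwaddr: cannot read permanent MAC of $dev" >&2; return 1 ;;
    esac
    # -i.bak edits in place and saves the original as ifcfg-<dev>.bak
    sed -i.bak "s/^HWADDR=.*/HWADDR=$perm/" "$dir/ifcfg-$dev"
}
```

For example: `for dev in eno1 eno2 eno3 eno4; do fix_hwaddr "$dev"; done`. Compare each file with its .bak copy before reloading, and remove or move the backups once the bonds are confirmed up.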

2. Reload the configuration and restart bond0

    nmcli con reload       # reload the connection files from disk
    nmcli con down bond0   # deactivate bond0
    nmcli con up bond0     # reactivate bond0

    # Restart networking
    systemctl restart NetworkManager
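After the restart, the bonding driver's sysfs interface shows which NICs a bond has actually enslaved. A sketch that reads `/sys/class/net/<bond>/bonding/slaves`; `bond_slaves` is a hypothetical name, and the optional second argument (an alternative sysfs root) exists only to make it testable:

```shell
# bond_slaves (hypothetical helper): print the interfaces currently enslaved
# to BOND, one per line, from the bonding driver's sysfs "slaves" file.
bond_slaves() {
    local bond sysfs f
    bond=$1
    sysfs=${2:-/sys/class/net}
    f="$sysfs/$bond/bonding/slaves"
    [ -r "$f" ] || { echo "bond_slaves: $bond is not a bond device" >&2; return 1; }
    tr ' ' '\n' < "$f" | sed '/^$/d'            # space-separated -> one per line
}
```

`bond_slaves bond0` should print eno3 and eno4; running it for bond1 and bond2 shows whether eno1 and eno2 have rejoined their bonds as well.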

3. Check the detailed status of bond0

    cat /proc/net/bonding/bond0
    Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

    Bonding Mode: IEEE 802.3ad Dynamic link aggregation
    Transmit Hash Policy: layer2 (0)
    MII Status: up
    MII Polling Interval (ms): 100
    Up Delay (ms): 0
    Down Delay (ms): 0
    Peer Notification Delay (ms): 0

    802.3ad info
    LACP rate: slow
    Min links: 0
    Aggregator selection policy (ad_select): stable
    System priority: 65535
    System MAC address: cc:05:77:c8:15:24
    Active Aggregator Info:
        Aggregator ID: 1
        Number of ports: 2
        Actor Key: 9
        Partner Key: 13
        Partner Mac Address: 90:5d:7c:ed:bc:e8

    Slave Interface: eno3
    MII Status: up
    Speed: 1000 Mbps
    Duplex: full
    Link Failure Count: 0
    Permanent HW addr: 70:fd:45:7d:70:98
    Slave queue ID: 0
    Aggregator ID: 1
    Actor Churn State: none
    Partner Churn State: none
    Actor Churned Count: 0
    Partner Churned Count: 0
    details actor lacp pdu:
        system priority: 65535
        system mac address: cc:05:77:c8:15:24
        port key: 9
        port priority: 255
        port number: 1
        port state: 61
    details partner lacp pdu:
        system priority: 32768
        system mac address: 90:5d:7c:ed:bc:e8
        oper key: 13
        port priority: 32768
        port number: 29
        port state: 61

    Slave Interface: eno4
    MII Status: up
    Speed: 1000 Mbps
    Duplex: full
    Link Failure Count: 0
    Permanent HW addr: 70:fd:45:7d:70:99
    Slave queue ID: 0
    Aggregator ID: 1
    Actor Churn State: none
    Partner Churn State: none
    Actor Churned Count: 0
    Partner Churned Count: 0
    details actor lacp pdu:
        system priority: 65535
        system mac address: cc:05:77:c8:15:24
        port key: 9
        port priority: 255
        port number: 2
        port state: 61
    details partner lacp pdu:
        system priority: 32768
        system mac address: 90:5d:7c:ed:bc:e8
        oper key: 13
        port priority: 32768
        port number: 71
        port state: 61
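The key lines in this output are each slave's MII Status and Aggregator ID: in 802.3ad mode, only ports in the active aggregator carry traffic. A sketch that summarises this, assuming the text layout shown above; `lacp_check` is a hypothetical name:

```shell
# lacp_check (hypothetical helper): summarise /proc/net/bonding/<bond> for an
# 802.3ad bond. Prints "<slave> mii=<status> agg=<id> OK|CHECK", where OK means
# the slave is up and belongs to the active aggregator.
lacp_check() {
    awk '
        /Active Aggregator Info:/                { in_active = 1; next }
        in_active && /Aggregator ID:/            { active = $3; in_active = 0; next }
        /^[ \t]*Slave Interface:/                { slave = $3; order[++n] = slave; next }
        slave != "" && /^[ \t]*MII Status:/      { mii[slave] = $3; next }
        slave != "" && /^[ \t]*Aggregator ID:/   { agg[slave] = $3; next }
        END {
            for (i = 1; i <= n; i++) {
                s = order[i]
                ok = (mii[s] == "up" && agg[s] == active)
                printf "%s mii=%s agg=%s %s\n", s, mii[s], agg[s], (ok ? "OK" : "CHECK")
            }
        }
    ' "${1:-/proc/net/bonding/bond0}"
}
```

For the output above, eno3 and eno4 are both up and in aggregator 1, so `lacp_check /proc/net/bonding/bond0` would print OK for both. A slave in a different aggregator usually points to a switch-side LACP configuration problem.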

4. Test whether the network is working again
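A quick reachability test can ping the gateways from the configuration files, for example 10.220.210.65 (bond0) and 10.220.245.65 (bond2). A sketch; `check_reachable` is a hypothetical name, and it assumes the iputils `ping` options `-c` (count) and `-W` (timeout in seconds):

```shell
# check_reachable (hypothetical helper): ping each host once with a 1-second
# timeout, report the result, and return non-zero if any host is unreachable.
check_reachable() {
    local rc=0 host
    for host in "$@"; do
        if ping -c 1 -W 1 "$host" >/dev/null 2>&1; then
            echo "$host reachable"
        else
            echo "$host UNREACHABLE"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `check_reachable 10.220.210.65 10.220.245.65`; a non-zero exit status means at least one gateway is still unreachable.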

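Recommendations:

When bonding several NICs into one virtual interface:

Do not set HWADDR in the physical NICs' configuration files.

Do not set MACADDR in the bond interface's configuration file.

Let the system assign these MAC addresses automatically.

This keeps the network from failing to come up after a NIC is replaced.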
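On an existing system, following these recommendations amounts to removing the HWADDR and MACADDR lines. A minimal sketch that comments them out instead of deleting them; `unpin_macs` is a hypothetical name. It assumes the interface names (eno1, ens1f0, ...) stay stable across hardware changes, because without HWADDR the profiles are matched by device name only:

```shell
# unpin_macs (hypothetical helper): comment out HWADDR= and MACADDR= lines in
# the given ifcfg files so the profiles no longer depend on specific MACs.
# Each original file is kept with a .bak suffix.
unpin_macs() {
    local f
    for f in "$@"; do
        sed -i.bak -e 's/^\(HWADDR=\)/#\1/' -e 's/^\(MACADDR=\)/#\1/' "$f"
    done
}
```

For example (bash brace expansion): `unpin_macs /etc/sysconfig/network-scripts/ifcfg-{eno1,eno2,eno3,eno4,ens1f0,ens1f1,bond0,bond1,bond2}`, followed by `nmcli con reload`. Without MACADDR a bond takes its MAC from a slave, so the bond's MAC address may change; bond1 uses DHCP, so its lease may be affected.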

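This completes the fix for a Linux server whose network stopped working after its physical NICs were replaced (here, the onboard NICs, as part of a motherboard replacement).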
