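Problem description:

In a production 5-node ClickHouse cluster, the memory in one node's server failed and caused the server to reboot unexpectedly. After the memory was replaced and the server was started again, the clickhouse-server service could not start and the node stayed down.

Troubleshooting and resolution:

1. Log in to the failed node and enable ClickHouse logging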

vi /etc/clickhouse-server/config.xml

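<log>/data/server/clickhouse/log/clickhouse-server.log</log> <!-- main log file (inside the logger section): records ClickHouse runtime activity such as startup, queries, and table loading -->

<errorlog>/data/server/clickhouse/log/clickhouse-server.err.log</errorlog> <!-- error log file: records serious errors, exception stack traces, and similar information -->

:wq! # save and exit

chmod 755 /etc/clickhouse-server -R # set permissions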

2. Start the service and check the latest log

systemctl stop clickhouse-server # stop the service

systemctl start clickhouse-server # start the service

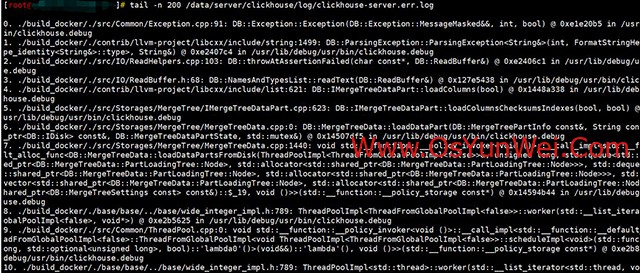

tail -n 200 /data/server/clickhouse/log/clickhouse-server.err.log # show the last 200 lines of the error log

# The log output is as follows

7. ./build_docker/./src/Storages/MergeTree/MergeTreeData.cpp:1440: void std::__function::__policy_invoker<void ()>::__call_impl<std::__function::__default_alloc_func<DB::MergeTreeData::loadDataPartsFromDisk(ThreadPoolImpl<ThreadFromGlobalPoolImpl<false>>&, unsigned long, std::queue<std::vector<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>, std::allocator<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>>>, std::deque<std::vector<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>, std::allocator<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>>>, std::allocator<std::vector<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>, std::allocator<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>>>>>>&, std::shared_ptr<DB::MergeTreeSettings const> const&)::$_19, void ()>>(std::__function::__policy_storage const*) @ 0x14594b44 in /usr/lib/debug/usr/bin/clickhouse.debug

8. ./build_docker/./base/base/../base/wide_integer_impl.h:789: ThreadPoolImpl<ThreadFromGlobalPoolImpl<false>>::worker(std::__list_iterator<ThreadFromGlobalPoolImpl<false>, void*>) @ 0xe2b5625 in /usr/lib/debug/usr/bin/clickhouse.debug

9. ./build_docker/./src/Common/ThreadPool.cpp:0: void std::__function::__policy_invoker<void ()>::__call_impl<std::__function::__default_alloc_func<ThreadFromGlobalPoolImpl<false>::ThreadFromGlobalPoolImpl<void ThreadPoolImpl<ThreadFromGlobalPoolImpl<false>>::scheduleImpl<void>(std::function<void ()>, long, std::optional<unsigned long>, bool)::'lambda0'()>(void&&)::'lambda'(), void ()>>(std::__function::__policy_storage const*) @ 0xe2b8195 in /usr/lib/debug/usr/bin/clickhouse.debug

10. ./build_docker/./base/base/../base/wide_integer_impl.h:789: ThreadPoolImpl<std::thread>::worker(std::__list_iterator<std::thread, void*>) @ 0xe2b13f3 in /usr/lib/debug/usr/bin/clickhouse.debug

11. ./build_docker/./contrib/llvm-project/libcxx/include/__memory/unique_ptr.h:302: void* std::__thread_proxy[abi:v15000]<std::tuple<std::unique_ptr<std::__thread_struct, std::default_delete<std::__thread_struct>>, void ThreadPoolImpl<std::thread>::scheduleImpl<void>(std::function<void ()>, long, std::optional<unsigned long>, bool)::'lambda0'()>>(void*) @ 0xe2b7061 in /usr/lib/debug/usr/bin/clickhouse.debug

12. ? @ 0x8f1b in /usr/lib64/libpthread-2.28.so

13. clone @ 0xf833f in /usr/lib64/libc-2.28.so

(version 23.3.2.37 (official build))

2025.07.17 22:12:52.094227 [ 2124980 ] {} <Error> autoops_workbench.inspection_hardware_exec_host_history_distributed (92f2f288-f098-4e5e-acc3-ad4dede208c4): Detaching broken part /data/server/clickhouse/store/92f/92f2f288-f098-4e5e-acc3-ad4dede208c4/20250703_66576_66576_0 (size: 0.00 B). If it happened after update, it is likely because of backward incompatibility. You need to resolve this manually

2025.07.17 22:12:52.094396 [ 2124980 ] {} <Error> autoops_workbench.inspection_hardware_exec_host_history_distributed (92f2f288-f098-4e5e-acc3-ad4dede208c4): while loading part 20250703_66575_66575_0 on path store/92f/92f2f288-f098-4e5e-acc3-ad4dede208c4/20250703_66575_66575_0: Code: 27. DB::ParsingException: Cannot parse input: expected 'columns format version: 1\n' at end of stream. (CANNOT_PARSE_INPUT_ASSERTION_FAILED), Stack trace (when copying this message, always include the lines below):

0. ./build_docker/./src/Common/Exception.cpp:91: DB::Exception::Exception(DB::Exception::MessageMasked&&, int, bool) @ 0xe1e20b5 in /usr/lib/debug/usr/bin/clickhouse.debug

1. ./build_docker/./contrib/llvm-project/libcxx/include/string:1499: DB::ParsingException::ParsingException<String&>(int, FormatStringHelperImpl<std::type_identity<String&>::type>, String&) @ 0xe2407c4 in /usr/lib/debug/usr/bin/clickhouse.debug

2. ./build_docker/./src/IO/ReadHelpers.cpp:103: DB::throwAtAssertionFailed(char const*, DB::ReadBuffer&) @ 0xe2406c1 in /usr/lib/debug/usr/bin/clickhouse.debug

3. ./build_docker/./src/IO/ReadBuffer.h:68: DB::NamesAndTypesList::readText(DB::ReadBuffer&) @ 0x127e5438 in /usr/lib/debug/usr/bin/clickhouse.debug

4. ./build_docker/./contrib/llvm-project/libcxx/include/list:621: DB::IMergeTreeDataPart::loadColumns(bool) @ 0x1448a338 in /usr/lib/debug/usr/bin/clickhouse.debug

5. ./build_docker/./src/Storages/MergeTree/IMergeTreeDataPart.cpp:623: DB::IMergeTreeDataPart::loadColumnsChecksumsIndexes(bool, bool) @ 0x14489c6a in /usr/lib/debug/usr/bin/clickhouse.debug

6. ./build_docker/./src/Storages/MergeTree/MergeTreeData.cpp:0: DB::MergeTreeData::loadDataPart(DB::MergeTreePartInfo const&, String const&, std::shared_ptr<DB::IDisk> const&, DB::MergeTreeDataPartState, std::mutex&) @ 0x14507df5 in /usr/lib/debug/usr/bin/clickhouse.debug

7. ./build_docker/./src/Storages/MergeTree/MergeTreeData.cpp:1440: void std::__function::__policy_invoker<void ()>::__call_impl<std::__function::__default_alloc_func<DB::MergeTreeData::loadDataPartsFromDisk(ThreadPoolImpl<ThreadFromGlobalPoolImpl<false>>&, unsigned long, std::queue<std::vector<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>, std::allocator<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>>>, std::deque<std::vector<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>, std::allocator<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>>>, std::allocator<std::vector<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>, std::allocator<std::shared_ptr<DB::MergeTreeData::PartLoadingTree::Node>>>>>>&, std::shared_ptr<DB::MergeTreeSettings const> const&)::$_19, void ()>>(std::__function::__policy_storage const*) @ 0x14594b44 in /usr/lib/debug/usr/bin/clickhouse.debug

8. ./build_docker/./base/base/../base/wide_integer_impl.h:789: ThreadPoolImpl<ThreadFromGlobalPoolImpl<false>>::worker(std::__list_iterator<ThreadFromGlobalPoolImpl<false>, void*>) @ 0xe2b5625 in /usr/lib/debug/usr/bin/clickhouse.debug

9. ./build_docker/./src/Common/ThreadPool.cpp:0: void std::__function::__policy_invoker<void ()>::__call_impl<std::__function::__default_alloc_func<ThreadFromGlobalPoolImpl<false>::ThreadFromGlobalPoolImpl<void ThreadPoolImpl<ThreadFromGlobalPoolImpl<false>>::scheduleImpl<void>(std::function<void ()>, long, std::optional<unsigned long>, bool)::'lambda0'()>(void&&)::'lambda'(), void ()>>(std::__function::__policy_storage const*) @ 0xe2b8195 in /usr/lib/debug/usr/bin/clickhouse.debug

10. ./build_docker/./base/base/../base/wide_integer_impl.h:789: ThreadPoolImpl<std::thread>::worker(std::__list_iterator<std::thread, void*>) @ 0xe2b13f3 in /usr/lib/debug/usr/bin/clickhouse.debug

11. ./build_docker/./contrib/llvm-project/libcxx/include/__memory/unique_ptr.h:302: void* std::__thread_proxy[abi:v15000]<std::tuple<std::unique_ptr<std::__thread_struct, std::default_delete<std::__thread_struct>>, void ThreadPoolImpl<std::thread>::scheduleImpl<void>(std::function<void ()>, long, std::optional<unsigned long>, bool)::'lambda0'()>>(void*) @ 0xe2b7061 in /usr/lib/debug/usr/bin/clickhouse.debug

12. ? @ 0x8f1b in /usr/lib64/libpthread-2.28.so

13. clone @ 0xf833f in /usr/lib64/libc-2.28.so

(version 23.3.2.37 (official build))

2025.07.17 22:12:52.094516 [ 2124980 ] {} <Error> autoops_workbench.inspection_hardware_exec_host_history_distributed (92f2f288-f098-4e5e-acc3-ad4dede208c4): Detaching broken part /data/server/clickhouse/store/92f/92f2f288-f098-4e5e-acc3-ad4dede208c4/20250703_66575_66575_0 (size: 0.00 B). If it happened after update, it is likely because of backward incompatibility. You need to resolve this manually

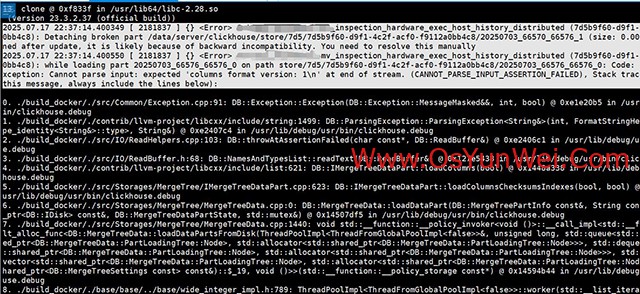

2025.07.17 22:12:52.201580 [ 2123926 ] {} <Error> Application: Caught exception while loading metadata: Code: 231. DB::Exception: Suspiciously many (167 parts, 0.00 B in total) broken parts to remove while maximum allowed broken parts count is 100. You can change the maximum value with merge tree setting 'max_suspicious_broken_parts' in <merge_tree> configuration section or in table settings in .sql file (don't forget to return setting back to default value): Cannot attach table `autoops_workbench`.`inspection_hardware_chart_history_distributed` from metadata file /data/server/clickhouse/store/9dc/9dc2573a-8292-4472-8e7f-8fe17c7e9b0b/inspection_hardware_chart_history_distributed.sql from query ATTACH TABLE autoops_workbench.inspection_hardware_chart_history_distributed UUID '7b6c30a1-91cb-458b-ae03-5acda452c66a' (`host_id` String, `exec_id` String, `insp_type` String, `insp_item` String, `insp_item_name` String, `insp_item_status` String, `insp_item_value` String, `insp_item_unit` String, `insp_datetime` DateTime, `insp_date` Date, `pool_id` String) ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/inspection_hardware_chart_history_distributed', '{replica}') PARTITION BY toYYYYMM(insp_datetime) ORDER BY (host_id, insp_datetime) SETTINGS index_granularity = 8192. (TOO_MANY_UNEXPECTED_DATA_PARTS), Stack trace (when copying this message, always include the lines below):

0. ./build_docker/./src/Common/Exception.cpp:91: DB::Exception::Exception(DB::Exception::MessageMasked&&, int, bool) @ 0xe1e20b5 in /usr/lib/debug/usr/bin/clickhouse.debug

1. ./build_docker/./contrib/llvm-project/libcxx/include/string:1499: DB::Exception::Exception<unsigned long&, String, DB::SettingFieldNumber<unsigned long> const&>(int, FormatStringHelperImpl<std::type_identity<unsigned long&>::type, std::type_identity<String>::type, std::type_identity<DB::SettingFieldNumber<unsigned long> const&>::type>, unsigned long&, String&&, DB::SettingFieldNumber<unsigned long> const&) @ 0x14514c7c in /usr/lib/debug/usr/bin/clickhouse.debug

2. ./build_docker/./src/Storages/MergeTree/MergeTreeData.cpp:0: DB::MergeTreeData::loadDataParts(bool) @ 0x145137a2 in /usr/lib/debug/usr/bin/clickhouse.debug

3. ./build_docker/./src/Storages/StorageReplicatedMergeTree.cpp:0: DB::StorageReplicatedMergeTree::StorageReplicatedMergeTree(String const&, String const&, bool, DB::StorageID const&, String const&, DB::StorageInMemoryMetadata const&, std::shared_ptr<DB::Context>, String const&, DB::MergeTreeData::MergingParams const&, std::unique_ptr<DB::MergeTreeSettings, std::defa

# The key messages in the log are these

2025.07.17 22:12:52.094516 [ 2124980 ] {} <Error> autoops_workbench.inspection_hardware_exec_host_history_distributed (92f2f288-f098-4e5e-acc3-ad4dede208c4): Detaching broken part /data/server/clickhouse/store/92f/92f2f288-f098-4e5e-acc3-ad4dede208c4/20250703_66575_66575_0 (size: 0.00 B). If it happened after update, it is likely because of backward incompatibility. You need to resolve this manually

2025.07.17 22:12:52.201580 [ 2123926 ] {} <Error> Application: Caught exception while loading metadata: Code: 231. DB::Exception: Suspiciously many (167 parts, 0.00 B in total) broken parts to remove while maximum allowed broken parts count is 100. You can change the maximum value with merge tree setting 'max_suspicious_broken_parts' in <merge_tree> configuration section or in table settings in .sql file (don't forget to return setting back to default value): Cannot attach table `autoops_workbench`.`inspection_hardware_chart_history_distributed` from metadata file /data/server/clickhouse/store/9dc/9dc2573a-8292-4472-8e7f-8fe17c7e9b0b/inspection_hardware_chart_history_distributed.sql from query ATTACH TABLE autoops_workbench.inspection_hardware_chart_history_distributed UUID '7b6c30a1-91cb-458b-ae03-5acda452c66a' (`host_id` String, `exec_id` String, `insp_type` String, `insp_item` String, `insp_item_name` String, `insp_item_status` String, `insp_item_value` String, `insp_item_unit` String, `insp_datetime` DateTime, `insp_date` Date, `pool_id` String) ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/inspection_hardware_chart_history_distributed', '{replica}') PARTITION BY toYYYYMM(insp_datetime) ORDER BY (host_id, insp_datetime) SETTINGS index_granularity = 8192. (TOO_MANY_UNEXPECTED_DATA_PARTS), Stack trace (when copying this message, always include the lines below):

3. Log analysis

3.1 ClickHouse detected a broken part at this path:

/data/server/clickhouse/store/92f/92f2f288-f098-4e5e-acc3-ad4dede208c4/20250703_66575_66575_0

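The part is 0 bytes in size, which means its data is incomplete or corrupted, so ClickHouse cannot load it.

ClickHouse detaches such a part automatically but does not delete it.

3.2 Too many broken parts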

2025.07.17 22:12:52.201580 [ 2123926 ] {} <Error> Application: Caught exception while loading metadata: Code: 231. DB::Exception: Suspiciously many (167 parts, 0.00 B in total) broken parts to remove while maximum allowed broken parts count is 100. You can change the maximum value with merge tree setting 'max_suspicious_broken_parts' in <merge_tree> configuration section or in table settings in .sql file (don't forget to return setting back to default value)

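ClickHouse found 167 broken parts while loading the table.

At most 100 broken parts are allowed by default (the `max_suspicious_broken_parts` setting named in the log). Above that limit ClickHouse refuses to load the table, and this is what prevents the server from starting.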
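To see which tables are affected and by how much, the "Detaching broken part" messages can be tallied per table. The following is a minimal sketch, assuming the log line layout shown above (the table name is the field right after `<Error>`); `count_broken_parts` is a hypothetical helper name, not a ClickHouse tool.

```shell
#!/bin/sh
# Count "Detaching broken part" messages per table in a ClickHouse error log.
# Assumption: the table name is the field that follows "<Error>" on each line.
# Prints one "<count> <database.table>" line per table, largest count first.
count_broken_parts() {
    awk '/Detaching broken part/ {
             for (i = 1; i < NF; i++)
                 if ($i == "<Error>") { n[$(i + 1)]++; break }
         }
         END { for (t in n) print n[t], t }' "$1" | sort -rn
}
```

For example, `count_broken_parts /data/server/clickhouse/log/clickhouse-server.err.log` would list each table with its number of broken parts, which can be compared against the limit of 100.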

4. Solution

4.1 Delete the broken part

rm -rf /data/server/clickhouse/store/92f/92f2f288-f098-4e5e-acc3-ad4dede208c4/20250703_66575_66575_0
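With many broken parts, removing them one by one is tedious, and `rm -rf` cannot be undone. The sketch below lists the part directories of one table whose `columns.txt` is empty and moves them to a quarantine directory instead of deleting them. It assumes that an empty `columns.txt` is what identifies the broken parts here (this matches the "expected 'columns format version: 1\n' at end of stream" error in the log) and that clickhouse-server is stopped while it runs. The function names and the quarantine path are hypothetical.

```shell
#!/bin/sh
# List part directories directly under a table's data directory whose
# columns.txt is empty (0 bytes). Parts already under detached/ sit one
# level deeper and are therefore not matched.
find_empty_parts() {
    find "$1" -mindepth 2 -maxdepth 2 -type f -name columns.txt -size 0 \
        -exec dirname {} \; | sort
}

# Move those part directories into a quarantine directory rather than
# deleting them, so they can be inspected or restored later.
quarantine_empty_parts() {
    table_dir=$1
    quarantine_dir=$2
    mkdir -p "$quarantine_dir"
    find_empty_parts "$table_dir" | while IFS= read -r part; do
        mv "$part" "$quarantine_dir/"
    done
}
```

A possible call, using the table directory from the log, would be `quarantine_empty_parts /data/server/clickhouse/store/92f/92f2f288-f098-4e5e-acc3-ad4dede208c4 /data/quarantine`. Running `find_empty_parts` on its own first shows what would be moved.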

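4.2 Edit the configuration file to raise the number of broken parts allowed

The default is 100 and 167 parts are broken here, so set the limit to 200, which is above 167.

Note: once the problem is resolved, remember to restore the default value of 100, so that large numbers of broken parts are not tolerated in the long term.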

vi /etc/clickhouse-server/config.xml

<merge_tree>

<max_suspicious_broken_parts>200</max_suspicious_broken_parts>

</merge_tree>

:wq! # save and exit

chmod 755 /etc/clickhouse-server -R # set permissions
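Because the raised limit is meant to be temporary, one option is to keep it in a separate override file rather than in config.xml, so that reverting it is a matter of deleting one file. The sketch below assumes the standard `config.d` override directory next to config.xml and the `<clickhouse>` root element; the function name and the file name `max_suspicious_broken_parts.xml` are hypothetical.

```shell
#!/bin/sh
# Write a small override file that raises max_suspicious_broken_parts.
# $1: override directory (for example /etc/clickhouse-server/config.d)
# $2: temporary limit (for example 200)
write_broken_parts_override() {
    conf_dir=$1
    limit=$2
    mkdir -p "$conf_dir"
    cat > "$conf_dir/max_suspicious_broken_parts.xml" <<EOF
<clickhouse>
    <merge_tree>
        <max_suspicious_broken_parts>$limit</max_suspicious_broken_parts>
    </merge_tree>
</clickhouse>
EOF
}
```

Calling `write_broken_parts_override /etc/clickhouse-server/config.d 200` before the restart has the same effect as the edit above. After the node has recovered, removing the file and restarting the service returns the setting to its default.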

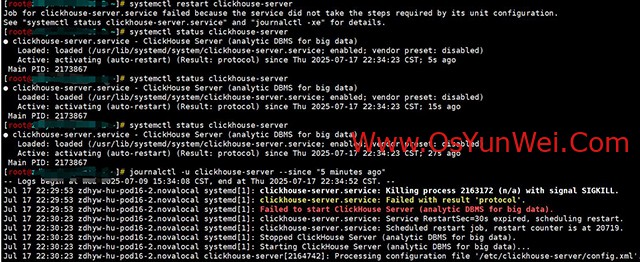

4.3 Restart the ClickHouse service

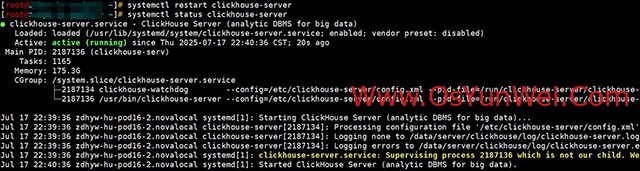

systemctl restart clickhouse-server

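The service starts successfully.

5. Verify the cluster

clickhouse-client --password # log in with the client

DESCRIBE TABLE autoops_workbench.mv_inspection_hardware_exec_host_history_distributed; -- show the table structure

SELECT count(*) FROM autoops_workbench.mv_inspection_hardware_exec_host_history_distributed; -- check the table data

6. Check whether the table uses replication (ReplicatedMergeTree):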

SHOW CREATE TABLE autoops_workbench.mv_inspection_hardware_exec_host_history_distributed;

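The cluster has 5 nodes and 5 replicas, so the part data deleted above is synchronized back from the healthy replicas. There is no need to worry about data loss as long as another replica still holds those parts.

Because the tables use replication (ReplicatedMergeTree), ClickHouse fetches the missing data from the other replicas in the background to keep the data consistent.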
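To confirm that the background synchronization has caught up, the `system.replicas` table can be queried on the repaired node. The sketch below only builds the query text; `replica_status_sql` is a hypothetical helper name, and which values count as healthy (for example `queue_size` draining to 0 and `is_readonly` being 0) is an assumption to check against your own cluster.

```shell
#!/bin/sh
# Print a query that shows replication status for every replicated table
# in one database. $1: database name.
replica_status_sql() {
    cat <<EOF
SELECT database, table, is_readonly, queue_size, absolute_delay, active_replicas, total_replicas
FROM system.replicas
WHERE database = '$1'
ORDER BY queue_size DESC
EOF
}
```

It can be run as `clickhouse-client --password --query "$(replica_status_sql autoops_workbench)"`. Repeating the query a few times should show `queue_size` shrinking while the missing parts are fetched.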

This completes the repair of the ClickHouse cluster node that could not start after a hardware failure.
